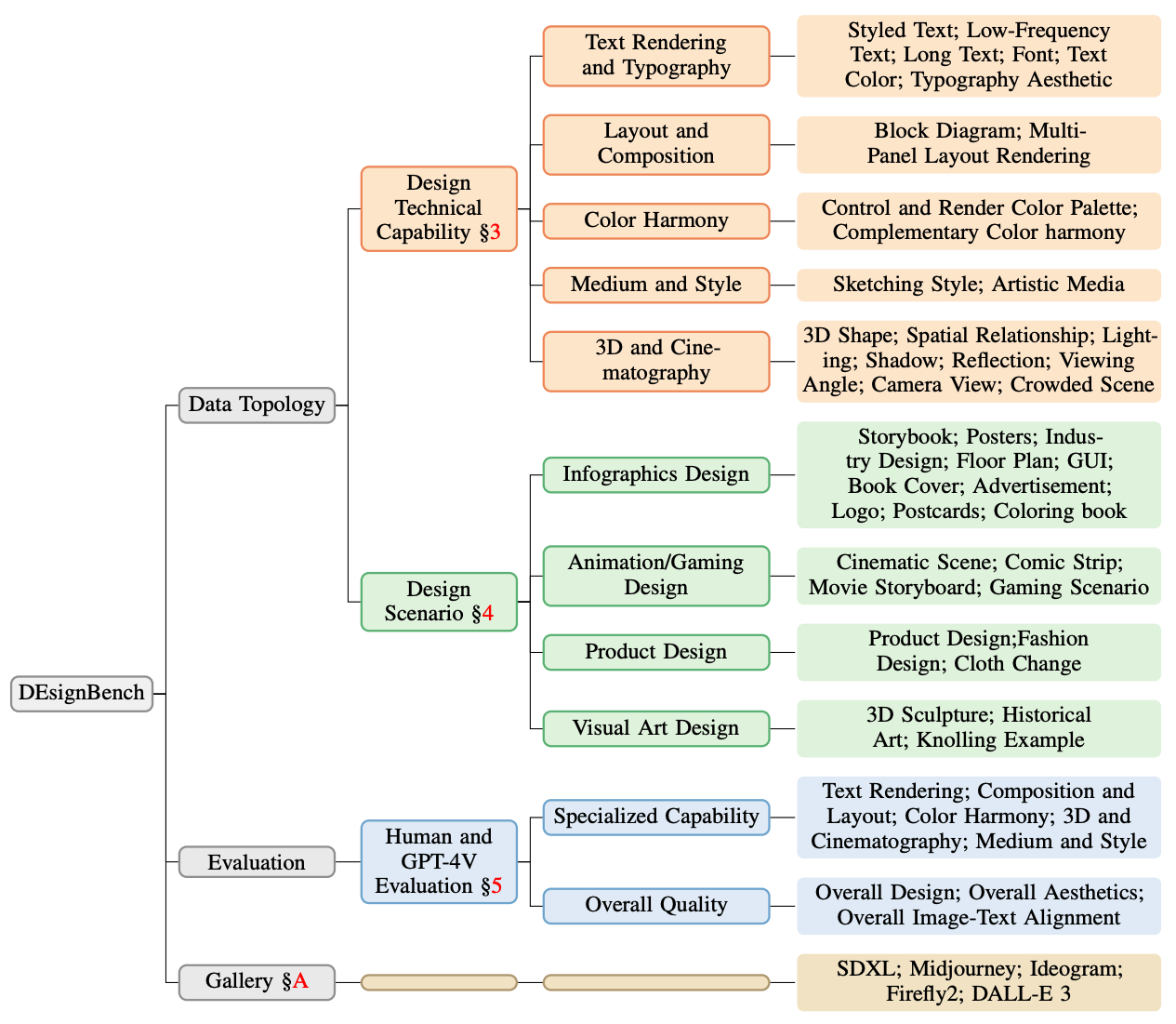

We introduce DEsignBench, a text-to-image (T2I) generation benchmark tailored for visual design scenarios. Recent T2I models such as DALL-E 3 have demonstrated remarkable capabilities in generating photorealistic images that align closely with textual inputs. While the allure of creating visually captivating images is undeniable, our emphasis extends beyond mere aesthetic pleasure. We aim to investigate the potential of using these powerful models in authentic design contexts. In pursuit of this goal, we develop DEsignBench, which incorporates test samples designed to assess T2I models on both “design technical capability” and “design application scenario.” Each of these two dimensions is supported by a diverse set of specific design categories. We explore DALL-E 3 together with other leading T2I models on DEsignBench, resulting in a comprehensive visual gallery for side-by-side comparisons. For benchmarking, we perform human evaluations on the generated images in the DEsignBench gallery against the criteria of image-text alignment, visual aesthetic, and design creativity. Our evaluation also considers other specialized design capabilities, including text rendering, layout composition, color harmony, 3D design, and medium style. In addition to human evaluations, we introduce the first automatic image generation evaluator powered by GPT-4V. This evaluator provides ratings that align well with human judgments, while being easily replicable and cost-efficient.

The DEsignBench prompts are available in a Google Sheet or through a backup download link. They can also be fetched directly with wget:

wget https://unitab.blob.core.windows.net/data/designbench/DesignBench_Prompts.tsv

To download the gallery images, use azcopy:

path/to/azcopy copy https://unitab.blob.core.windows.net/data/designbench [local_path] --recursive

Append firefly2, ideogram, SDXL, Midjourney, or dalle3 after data/designbench in the URL to download images from a specific model.
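The per-model download described above can be scripted. The following is a minimal Python sketch that builds the azcopy argument list, optionally for a single model. The helper name `azcopy_command` and the `azcopy` executable path are hypothetical, and the sketch assumes the model folder names are spelled exactly as listed above.

```python
import shlex

# Base URL taken from the download instructions above.
BASE_URL = "https://unitab.blob.core.windows.net/data/designbench"

# Model folder names as listed above; exact casing on the server is an assumption.
MODELS = ("firefly2", "ideogram", "SDXL", "Midjourney", "dalle3")


def azcopy_command(local_path, model=None, azcopy="azcopy"):
    """Build the azcopy argument list for the whole gallery or one model.

    `azcopy` is the path to the azcopy executable (hypothetical default).
    """
    if model is not None and model not in MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {MODELS}")
    # Append the model name after data/designbench to restrict the download.
    source = BASE_URL if model is None else f"{BASE_URL}/{model}"
    return [azcopy, "copy", source, local_path, "--recursive"]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no side effects.
    print(shlex.join(azcopy_command("./designbench_images", model="dalle3")))
```

The returned list can be passed to `subprocess.run` once the azcopy path and the local destination have been checked.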

@article{lin2023designbench,

title= {DEsignBench: Exploring and Benchmarking DALL-E 3 for Imagining Visual Design},

author={Kevin Lin and Zhengyuan Yang and Linjie Li and Jianfeng Wang and Lijuan Wang},

journal={arXiv preprint arXiv:2310.15144},

year= {2023},

}

We express our gratitude to all contributors from OpenAI for their technical efforts on the DALL-E 3 project. Our sincere appreciation goes to Aditya Ramesh, Li Jing, Tim Brooks, and James Betker at OpenAI, who have provided thoughtful feedback on this work. We are profoundly thankful to Misha Bilenko for his invaluable guidance and support. We also extend heartfelt thanks to our Microsoft colleagues for their insights, with special acknowledgment to Jamie Huynh, Nguyen Bach, Ehsan Azarnasab, Faisal Ahmed, Lin Liang, Chung-Ching Lin, Ce Liu, and Zicheng Liu.

This website is adapted from Nerfies, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.